
Jonny Trees Online

Experiences with the .NET framework, SharePoint and Dynamics CRM

Sunday, December 4, 2016

Tuesday, June 30, 2015

Upload files to Exchange Public Folders using PowerShell

The Script

The Public Folders store name in step 2 ("Public Folders - Jonny.Trees@youremailaddress.com") must be changed to the name you see in Outlook when browsing Public Folders.

The other values to change are the source and target paths set up in step 0.

```powershell
# Get Start Time
Write-Host "-----------------------------"
$start = (Get-Date)
Write-Host ("Start time: " + $start)
Write-Host "-----------------------------"

# 0 Setup source and target (leave no trailing \)
$source = "C:\Apps\Upload to PF\Batch 1"
$targetFolder = "All Public Folders\Test\Batch 1"
$failArray = @()

# 1 Initiate connection to Outlook
$outlook = New-Object -ComObject Outlook.Application
$mapi = $outlook.GetNamespace("MAPI")

# 2 Connect to PF (use the store name shown in Outlook)
$pfStore = $mapi.Session.Stores.Item("Public Folders - Jonny.Trees@youremailaddress.com")

# 3 Connect to folder in PF, one path segment at a time
$currentFolder = $pfStore.GetRootFolder()
$folderPathSplit = $targetFolder.Split("\")
foreach ($folderName in $folderPathSplit) {
    try {
        $currentFolder = $currentFolder.Folders.Item($folderName)
    }
    catch {
        # Folders.Item raises an error when no subfolder has this name
        $currentFolder = $null
    }
    if ($currentFolder -eq $null) { break }
}
if ($currentFolder -eq $null) {
    ("Could not find folder: " + $targetFolder)
    return
}
Write-Host ""
("Found folder: " + $currentFolder.FolderPath)
Write-Host ""

# 4 Get the source files and volume
$volume = ("{0:N2}" -f ((Get-ChildItem -Path $source -Recurse | Measure-Object -Property Length -Sum).Sum / 1GB) + " GB")
$volumeMB = ("{0:N2}" -f ((Get-ChildItem -Path $source -Recurse | Measure-Object -Property Length -Sum).Sum / 1MB) + " MB")
("Volume to be moved: " + $volume + ", " + $volumeMB)
Write-Host ""
$source = $source + "\*"
# @() guarantees .Count works even when only one file matches
$sourceFiles = @(Get-ChildItem -Path $source -Include *.msg)

# 5 Copy the files
$counter = 1
$failCounter = 0
foreach ($file in $sourceFiles) {
    Write-Host -NoNewline ("Moving file " + $counter + " of " + $sourceFiles.Count + " ")
    try {
        $item = $mapi.OpenSharedItem($file.FullName)
        [void]$item.Move($currentFolder)
        Remove-Item -LiteralPath $file.FullName
        Write-Host "Success"
    }
    catch {
        $failArray += $file.FullName
        $failCounter = $failCounter + 1
        Write-Host "Fail"
    }
    finally {
        $counter = $counter + 1
    }
}
[System.Media.SystemSounds]::Exclamation.Play()

# Get End Time
$end = (Get-Date)
$scriptExecutionTime = $end - $start

# Print results
Write-Host ""
Write-Host "-----------------------------"
Write-Host ("End time: " + $end)
Write-Host "-----------------------------"
Write-Host ""
Write-Host ("Fail count: " + $failCounter)
foreach ($failedFile in $failArray) { Write-Host $failedFile }
Write-Host ""
Write-Host ("" + ($counter - $failArray.Count - 1) + " of " + ($counter - 1) + " files totalling " + $volume + " were uploaded successfully")
Write-Host ("Script execution time: " + $scriptExecutionTime.Hours + " hours, " + $scriptExecutionTime.Minutes + " minutes and " + $scriptExecutionTime.Seconds + " seconds")
Write-Host ("Average time per file: " + [math]::Round($scriptExecutionTime.TotalSeconds / $sourceFiles.Count, 1) + " seconds")
```
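Step 3 resolves the target folder by splitting the path on "\" and descending one level per segment. The sketch below shows that walk in JavaScript. It uses a hypothetical in-memory folder tree (the `{ name, folders }` shape and the `resolveFolder` name are illustrative, not part of the Outlook object model) and returns null as soon as a segment cannot be found, so the caller can report the missing folder before using it. It does an exact, case-sensitive name match; Outlook's own lookup may behave differently.

```javascript
// Hypothetical folder shape: { name: string, folders: Folder[] }.
// Mirrors the path walk in step 3; this is not the Outlook COM API.
function resolveFolder(root, path) {
  let current = root;
  for (const segment of path.split("\\")) {
    const next = current.folders.find((f) => f.name === segment);
    if (next === undefined) {
      return null; // segment missing: stop instead of descending into nothing
    }
    current = next;
  }
  return current;
}

const root = {
  name: "Public Folders",
  folders: [
    {
      name: "All Public Folders",
      folders: [{ name: "Test", folders: [{ name: "Batch 1", folders: [] }] }],
    },
  ],
};

console.log(resolveFolder(root, "All Public Folders\\Test\\Batch 1").name); // Batch 1
console.log(resolveFolder(root, "All Public Folders\\Test\\Batch 2")); // null
```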

Output

The output of the script is as follows.

```
-----------------------------
Start time: 06/30/2015 11:32:32
-----------------------------

Found folder: \\Public Folders\All Public Folders\Test\Batch 1

Volume to be moved: 0.02 GB, 20.03 MB

Moving file 1 of 3 Success
Moving file 2 of 3 Fail
Moving file 3 of 3 Success

-----------------------------
End time: 06/30/2015 11:32:45
-----------------------------

Fail count: 1
C:\Apps\Upload to PF\Batch1\Large file.msg

2 of 3 files totalling 0.02 GB were uploaded successfully
Script execution time: 0 hours, 0 minutes and 13 seconds
Average time per file: 4.3 seconds
```
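The summary lines follow directly from the script's counters. $counter starts at 1 and is incremented once per file in the finally block, so after the loop it equals the file count plus one; hence the "- 1" terms. The average is the total run time divided by the number of files. The JavaScript sketch below (the `summarise` function is hypothetical, not part of the script) reproduces the figures from the sample run above: 3 files, 1 failure, 13 seconds. Math.round rounds halves up, whereas .NET's [math]::Round defaults to banker's rounding, so the two can differ on exact midpoints only.

```javascript
// Reproduces the end-of-run summary arithmetic from the upload script.
// counterAfterLoop mirrors $counter: it starts at 1 and is incremented once per file.
function summarise(fileCount, failCount, totalSeconds) {
  const counterAfterLoop = fileCount + 1;
  const attempted = counterAfterLoop - 1;
  const succeeded = counterAfterLoop - failCount - 1;
  // Round to one decimal place, as [math]::Round(x, 1) does in the script
  const averageSeconds = Math.round((totalSeconds / fileCount) * 10) / 10;
  return { attempted, succeeded, averageSeconds };
}

const s = summarise(3, 1, 13);
console.log(`${s.succeeded} of ${s.attempted} files uploaded successfully`); // 2 of 3 files uploaded successfully
console.log(`Average time per file: ${s.averageSeconds} seconds`); // Average time per file: 4.3 seconds
```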

Tuesday, February 18, 2014

Very high opening/viewing numbers in audit log

I recently had an issue where I was seeing crazy numbers of downloads from a document library in the site collection audit logs: 20k downloads from one user across a two-day period, when the library only contained 300 documents. The culprit? Outlook. Any client that allows a user to sync the contents of a document library will write entries into the audit log every time a sync occurs, and Outlook is one such client that most users have. The sync is initiated via the "Connect to Outlook" button in the Ribbon of the document library.

Fortunately there's a workaround. In the Advanced Settings of the document library, the "Offline Client Availability" option can prevent offline clients from syncing content from the library.

If you make this change to a library:

- New connections to the library can still be created by clicking "Connect to Outlook", but the sync will never succeed
- Existing connections will still exist in Outlook, but attempts to sync will fail:
  - The error message only briefly appears on-screen when you manually do a "Send / Receive"
  - When "Send / Receive" runs in the background, the user is not warned at all
  - There is also no visual indication that the library is no longer syncing, which may or may not be a good thing

I also tested this with Colligo Email Manager, and unfortunately changing the "Offline Client Availability" setting has no effect: Colligo could still sync the contents of the library offline.

Monday, June 17, 2013

Posting multiple parameters to a Web API project using jQuery

*Note that once the issue discussed at the end of this post is taken into account, this is a good example of how to pass multiple parameters to a Web API method.

The Controller

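```csharp
using System.Web.Http;

public class UserDTO
{
    public int userID;
    public string username;
}

// Controller name follows from the "api/User" URL used in the jQuery below
public class UserController : ApiController
{
    [HttpPost]
    public string Post([FromBody]UserDTO userDTO)
    {
        return userDTO.userID.ToString() + " " + userDTO.username;
    }
}
```

The Web API route config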

```csharp
config.Routes.MapHttpRoute(
    name: "DefaultApi",
    routeTemplate: "api/{controller}/{id}",
    defaults: new { id = RouteParameter.Optional }
);
```

The jQuery

```javascript
var apiUrl = "http://localhost:55051/api/User";

var userDTO = {
    userID: 5,
    username: "testuser"
};

$.ajax({
    type: "POST",
    url: apiUrl,
    data: JSON.stringify(userDTO),
    datatype: "json",
    contenttype: "application/json; charset=utf-8"
});
```

Fiddler Output

```
{"userID":5,"username":"testuser"}
```

On Execution

```
userID = 0
username = null
```

The Issue?

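Case sensitivity in the $.ajax jQuery call:

- datatype should be dataType
- contenttype should be contentType

JavaScript property names are case-sensitive, so jQuery silently ignores both misspelled options. Without contentType, jQuery sends the body with its default content type, application/x-www-form-urlencoded; charset=UTF-8, so Web API does not read the body as JSON and the [FromBody] parameter is left with default values. dataType only tells jQuery how to parse the response, so it is the contentType typo that breaks the binding.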
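Because jQuery never complains about an option it does not recognise, a typo like this is easy to miss. The sketch below is a small check for it. The `findMiscasedAjaxKeys` helper is hypothetical, not part of jQuery, and `KNOWN_AJAX_OPTIONS` is a deliberately partial list covering only the options used in this post. The helper flags any settings key that matches a known option name only when case is ignored.

```javascript
// Partial list of $.ajax option names with their correct casing (extend as needed).
const KNOWN_AJAX_OPTIONS = ["type", "url", "data", "dataType", "contentType"];

// Returns a message for each key that matches a known option only
// case-insensitively, e.g. "contenttype" instead of "contentType".
function findMiscasedAjaxKeys(settings) {
  const byLowerCase = new Map(KNOWN_AJAX_OPTIONS.map((name) => [name.toLowerCase(), name]));
  return Object.keys(settings)
    .filter((key) => {
      const expected = byLowerCase.get(key.toLowerCase());
      return expected !== undefined && expected !== key;
    })
    .map((key) => `${key} should be ${byLowerCase.get(key.toLowerCase())}`);
}

const original = {
  type: "POST",
  url: "http://localhost:55051/api/User",
  data: JSON.stringify({ userID: 5, username: "testuser" }),
  datatype: "json",
  contenttype: "application/json; charset=utf-8",
};

const corrected = {
  type: "POST",
  url: original.url,
  data: original.data,
  dataType: "json",
  contentType: "application/json; charset=utf-8",
};

console.log(findMiscasedAjaxKeys(original).join("; ")); // datatype should be dataType; contenttype should be contentType
console.log(findMiscasedAjaxKeys(corrected).length); // 0
```

In the browser, the corrected object is what gets passed to $.ajax(corrected).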

Wednesday, December 15, 2010

Moving a Records Center: Bad Idea

Moving a SharePoint 2010 records center will cause several issues of varying significance.

Document Management Implications:

- If you have a custom Send To location allowing users to submit documents to the records center, its URL will need to be updated
  - A simple change
- If your Send To location leaves a reference to the file in the original location, that reference will be broken:
  - When clicking on the file reference you will see the error message "File not found"
  - This is irreversible

Records Center Implications:

- All content organizer rules will have incorrect URLs to the destination library, so these all need to be updated
  - A time-consuming change if you have a lot of rules
- Any document library/records library with retention schedules will be irreversibly broken, in that you can no longer manage the schedule. All libraries will need to be re-created, content migrated, and retention schedules added
  - The symptoms of this are detailed below in the section "Broken Records Library Symptoms"
  - I have found no way to get around this other than recreating all libraries!
  - A very time-consuming change if you have a lot of libraries, content, or complex retention schedules
  - Note: to delete records libraries you need to remove all records, then run the "Hold Processing and Reporting" timer job in Central Administration. When this is complete, you can delete the records library. Thanks to Michal Pisarek on MSDN for this one.

Broken Records Library Symptoms

When trying to manage the retention settings on the records library, I see the error:

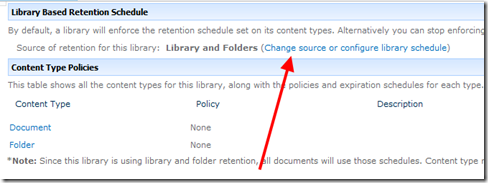

1. Click on Change source or configure library schedule:

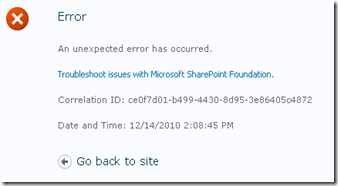

2. An error occurs.

3. And in the logs (nothing very helpful):

```
Exception Thrown: StartIndex cannot be less than zero.
Parameter name: startIndex Inner Exception: StartIndex cannot be less than zero.
Parameter name: startIndex
```

Recommendation

If you are using a single records center for managing retention, putting the records center in its own site collection is a good idea. This also isolates the content from a security perspective. You can always manually add links to the records center where necessary for people who need to access it from the main application.

Plan the Information Architecture up front: this one needs a good home from the start.

Tuesday, December 14, 2010

Delete document library option missing on settings page

You can’t delete a document library if it contains records. This is a sensible feature considering that documents declared as records would logically have some retention requirement…

To delete:

- Undeclare your records or move them to another location

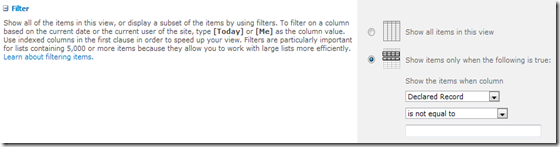

- If you're working with a large document library with many folders etc., you could create a "Records View" that shows only the documents where the "Declared Record" field is not blank

- When no records remain in the library, the delete option appears again on the settings page

- Delete the document library

Monday, November 15, 2010

PowerShell: file renaming en-masse

When you try to upload a file whose name contains certain symbols, SharePoint rejects it with this error:

The file name is invalid or the file is empty. A file name cannot contain any of the following characters: \ / : * ? " < > | # { } % ~ &

But what if you have hundreds or thousands of files that DO include one or more of these symbols, and you need to move them onto SharePoint?

The following PowerShell script will replace a string in a filename with another string you provide. It doesn't alter file extensions or folders. The script can be run with command-line parameters; any that are not provided will be prompted for. The following parameters are used:

- The directory, e.g. "C:\test"
- The text to find, e.g. "&"
- The replacement text, e.g. "and"
- An optional switch, -recurse, that specifies whether to recurse through subfolders

For example, run from the folder containing the script:

```powershell
.\fileRenamer.ps1 "C:\test" "&" "and" -recurse
```
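The per-file rule can be expressed as a pure function. The text is replaced in the base name only, the extension is kept, and the rename is skipped if the new name is already taken. The JavaScript sketch below is an illustration of that rule, not the PowerShell script itself. `proposeRename` and the `existingNames` set are hypothetical stand-ins for the script's file system checks, and the set is case-sensitive whereas Windows file names are not.

```javascript
// Sketch of the per-file rename rule, using plain strings instead of files.
// existingNames stands in for "does a file with this name already exist here?".
function proposeRename(fileName, find, replace, existingNames) {
  if (find === "") {
    throw new Error("find must not be empty"); // .NET String.Replace also rejects ""
  }
  // Like the script: skip names with no dot, or whose first dot is at position 0
  if (fileName.indexOf(".") <= 0) {
    return { action: "skip" };
  }
  const dot = fileName.lastIndexOf(".");
  const baseName = fileName.slice(0, dot);
  const extension = fileName.slice(dot); // keeps the dot, e.g. ".doc"
  if (!baseName.includes(find)) {
    return { action: "none" };
  }
  // split/join replaces every literal occurrence, like .NET String.Replace
  const newName = baseName.split(find).join(replace) + extension;
  if (existingNames.has(newName)) {
    return { action: "cancelled", newName };
  }
  return { action: "rename", newName };
}

console.log(proposeRename("Q&A.doc", "&", "and", new Set()));
// { action: 'rename', newName: 'QandA.doc' }
console.log(proposeRename("Q&A.doc", "&", "and", new Set(["QandA.doc"])));
// { action: 'cancelled', newName: 'QandA.doc' }
```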

Error Handling

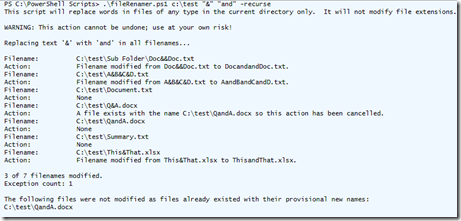

The only issue this script handles is when the provisional new name for a file already exists, e.g. you are changing Q&A.doc to QandA.doc and a file called QandA.doc is already in the folder. In this instance, the script notifies the user that the rename operation has been cancelled and adds the file to the summary at the end of the script's output.

Script Output

The script prints its progress to the console, and also provides some summary information at the end.

Disclaimer

Use at your own risk. I will not be held responsible for any actions and/or results this script may cause. If you choose to use this script, test it first, and only run it on directories/files that have backups: the implications of renaming large numbers of files are serious and the operation cannot be undone.

The Code

Paste this into a new file and save it as "fileRenamer.ps1" or similar.

```powershell
param(
    [switch]$recurse,
    [string]$directory = $(Read-Host "Please enter a directory"),
    [string]$find = $(Read-Host "Please enter a string to find"),
    [string]$replace = $(Read-Host "Please enter a replacement string")
)

# Startup text
echo "This script will replace text in the names of files of any type. It will not modify file extensions."
echo ""
echo "WARNING: This action cannot be undone; use at your own risk!"
echo ""

# Setup variables
$modifiedcounter = 0
$counter = 0
$exceptioncounter = 0
$exceptionfilenames = @()
$files = @()

echo "Replacing text '$find' with '$replace' in all filenames..."
echo ""

if ($recurse) {
    # Grab all files in the directory and its subfolders
    $files = Get-ChildItem $directory -Include *.* -Recurse
}
else {
    $files = Get-ChildItem $directory *.*
}

foreach ($file in $files) {
    $filename = $file.Name

    # Only run on files (not folders) whose name contains an extension
    if (-not $file.PSIsContainer -and $filename.IndexOf('.') -gt 0) {
        # Split at the last dot so the extension is left untouched
        $name_noextension = $filename.Substring(0, $filename.LastIndexOf('.'))
        $extension = $filename.Substring($filename.LastIndexOf('.'))

        echo ("Filename: " + $file.FullName)

        # Literal (not regex) match, consistent with the literal .Replace below
        if ($name_noextension.Contains($find)) {
            # Change the filename
            $newname = $name_noextension.Replace($find, $replace) + $extension
            $newpath = Join-Path $file.DirectoryName $newname

            # Test to see whether a file already exists (-LiteralPath so [ ] are not wildcards)
            if (Test-Path -LiteralPath $newpath) {
                # A file already exists with that filename
                echo ("Action: A file exists with the name " + $newpath + " so this action has been cancelled.")
                $exceptioncounter++
                $exceptionfilenames += $file.FullName
            }
            else {
                # Rename the file
                Rename-Item -LiteralPath $file.FullName -NewName $newname
                echo "Action: Filename modified from $filename to $newname."
                $modifiedcounter++
            }
        }
        else {
            echo "Action: None"
        }

        $counter++
    }
}

# Output the results
echo ""
echo "$modifiedcounter of $counter filenames modified."
echo "Exception count: $exceptioncounter"

# If there were exceptions then output them as well, one per line
if ($exceptioncounter -gt 0) {
    echo ""
    echo "The following files were not modified as files already existed with their provisional new names:"
    echo $exceptionfilenames
}

Read-Host "Press enter to quit"
```